The last major hurdle we need to overcome before installing some Oracle software and building a RAC database is to configure shared storage.

Shared storage is the key to making Oracle RAC work. Storage that is concurrently visible to, and updatable from, multiple servers really is the heart of the system.

Enterprise class dedicated SAN or NAS filers are very cool but very expensive. What we need is a scaled down economical solution which does the same thing. The answer? Openfiler!

Quick links to all the tasks:

- Task #1: Install Openfiler.

- Task #2: Configure Openfiler.

- Task #3: Configure iSCSI Targets in Oracle Linux.

- Task #4: Configure Oracle ASMlib.

Task #1: Install Openfiler.

Download the Openfiler 2.99.1 ISO and burn it to a DVD using ImgBurn. Then use the DVD to boot the shared storage server computer. Installing Openfiler is very simple and similar to installing Oracle Linux. Since we’re not installing Openfiler in a virtual environment, it’s a bit tricky to capture screen shots of a bare metal install. So I’ll have to rely on a text description. Fear not, this install is ridiculously easy and completes in 8 simple steps.

Task #1a: Press Enter to install in graphical mode.

After the server boots from DVD, simply hit the Enter key to get the install started.

Task #1b: Click Next.

Once the GUI comes up, just hit the Enter key to continue.

Task #1c: Select keyboard.

Select your desired keyboard layout from the menu and click Next.

Task #1d: Select installation drive.

The shared storage server has 4 drives in total, identified as sda, sdb, sdc and sdd:

| Device | Physical Drive | Usage |
|---|---|---|
| sda | Samsung 850 EVO 120GB 2.5-Inch SATA III Internal SSD | Openfiler OS |
| sdb | Western Digital 300 GB VelociRaptor SATA 3 Internal HD | Data |
| sdc | Western Digital 300 GB VelociRaptor SATA 3 Internal HD | Data |
| sdd | Samsung 850 EVO 120GB 2.5-Inch SATA III Internal SSD | Data |

Select the option to Remove all partitions on selected drives and create default layout.

Leave the first drive, sda, checked and uncheck all the other drives.

Finally, select sda as the boot installation drive and click Next.

Task #1e: Configure network devices.

The shared storage server has two NICs. Click Edit and configure them with these values:

| Interface | Field | Value |
|---|---|---|
| eth0 | IP Address | 200.200.10.6 |
| eth0 | Prefix (Netmask) | 255.255.255.0 |
| eth1 | IP Address | 200.200.20.6 |
| eth1 | Prefix (Netmask) | 255.255.255.0 |

Note, do not forget to disable IPv6 support and ensure both NICs are Active on Boot.

Set the remaining networking fields manually with these values, then click Next:

| Field | Value |
|---|---|
| Hostname | openfiler.mynet.com |
| Gateway | 200.200.10.1 |
| Primary DNS | 200.200.10.1 |
| Secondary DNS | 8.8.8.8 |

Task #1f: Choose timezone.

Select your preferred timezone from the menu. Leave System clock uses UTC checked. Click Next.

Task #1g: Choose root password.

Enter a root password twice. Write it down somewhere safe. Now! Don’t argue, just do it. Click Next.

Task #1h: Install the software.

A familiar looking left to right progress bar shows the installation’s progress. It only takes about a minute, then you’ll see a congratulations screen. Click Reboot.

Note, this reboot sometimes sticks at a completely blank screen. Don’t be alarmed. If this happens hard power off the machine, then power it back on and all will be well.

When the machine comes back up, it will display the URL for the Openfiler console, which in our case will be, https://200.200.10.6:446/. The default username is “openfiler” and the default password is, wait for it, “password”. No quotes of course.

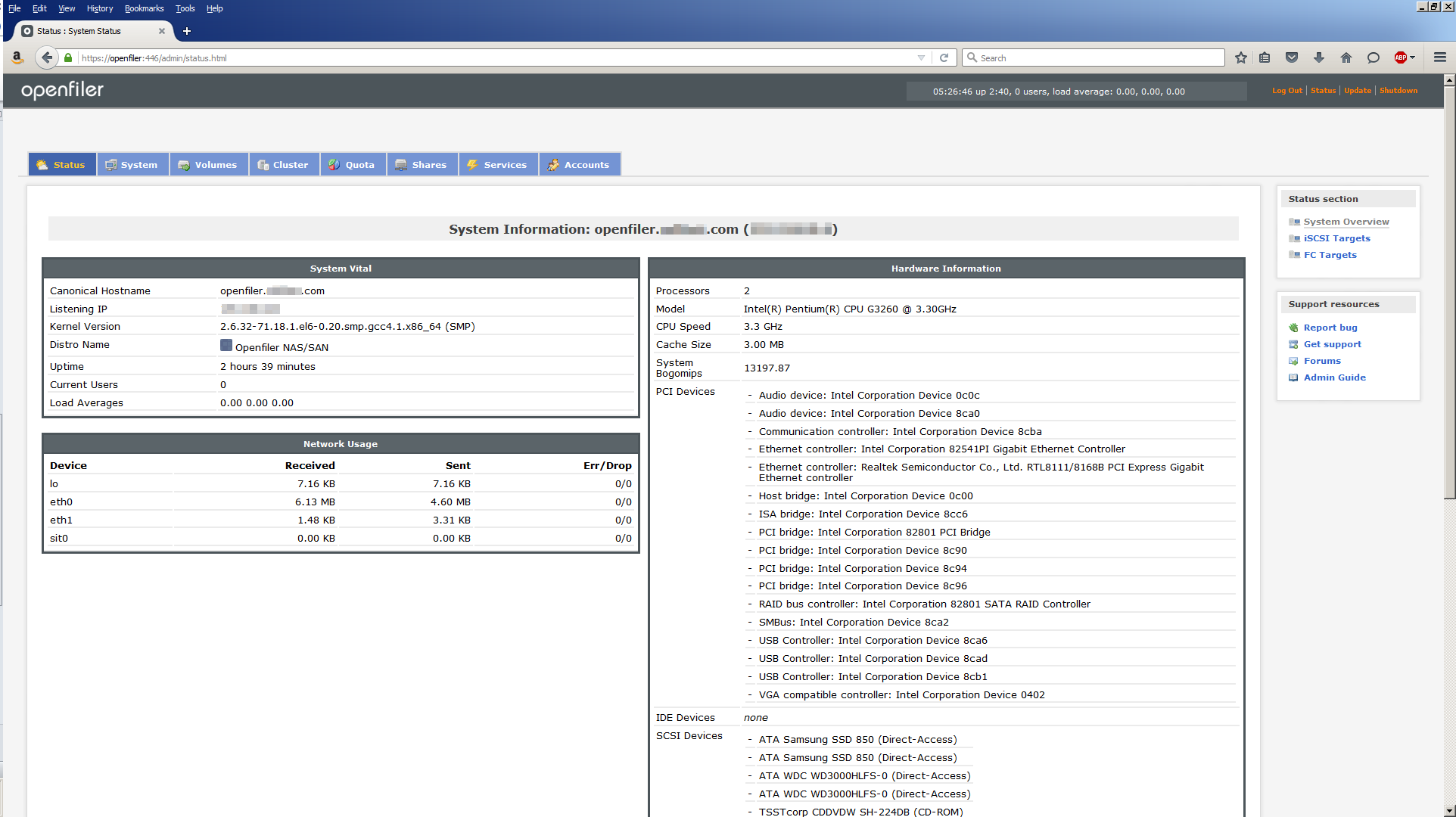

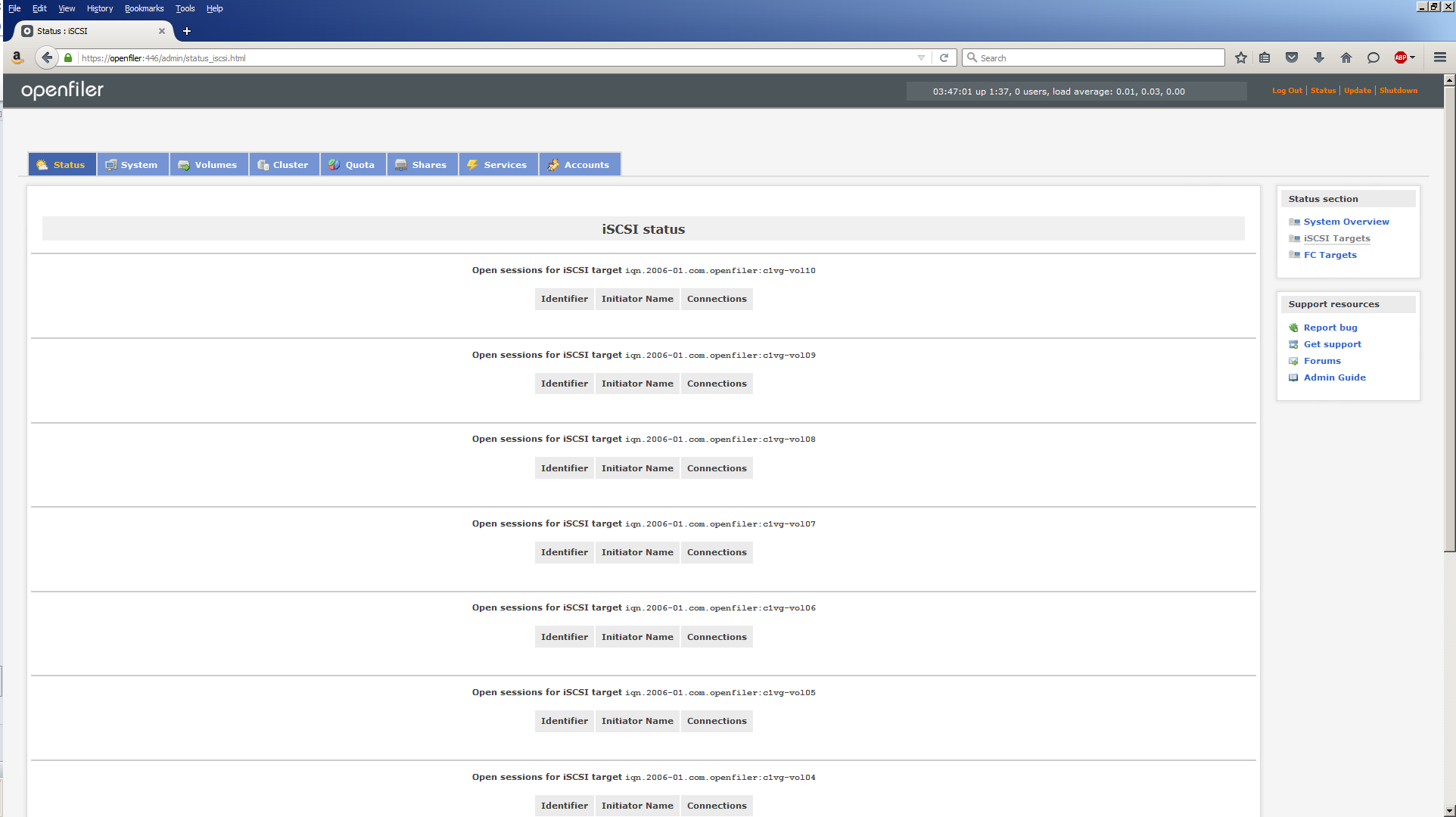

Once you get logged in, you’ll see a status screen similar to this:

Told you it was easy!

Task #2: Configure Openfiler.

Installing Openfiler was quite easy. Configuring the storage it manages is a little involved. There are 8 steps to this. Pay close attention. Maximum attention to detail is needed here!!!

Task #2a: Edit /etc/hosts.

Add the following to the openfiler server’s /etc/hosts file:

    200.200.20.11 racnode1-storage
    200.200.20.12 racnode2-storage

Then test network connectivity in both directions, from each racnode server to the Openfiler server and back again:

    [root@racnode1 ~]# ping -I eth1 openfiler-storage
    [root@racnode2 ~]# ping -I eth1 openfiler-storage
    [root@openfiler ~]# ping -I eth1 racnode1-storage
    [root@openfiler ~]# ping -I eth1 racnode2-storage
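If you'd rather not eyeball four separate ping sessions, the check can be wrapped in a small helper. This is only a sketch: the function name check_storage_hosts is made up for illustration, and it assumes the storage interface is eth1 and that the host names resolve as described above.

```shell
# Hypothetical helper (not part of Openfiler or Oracle Linux):
# ping each host twice over the given interface and report OK/FAIL.
check_storage_hosts() {
  iface=$1
  shift
  failed=0
  for host in "$@"; do
    if ping -c 2 -I "$iface" "$host" >/dev/null 2>&1; then
      echo "OK   $host"
    else
      echo "FAIL $host"
      failed=1
    fi
  done
  # Non-zero exit status if any host did not answer
  return "$failed"
}
```

On the Openfiler server you would call it as `check_storage_hosts eth1 racnode1-storage racnode2-storage`, and on each RAC node as `check_storage_hosts eth1 openfiler-storage`.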

Task #2b: Enable iSCSI.

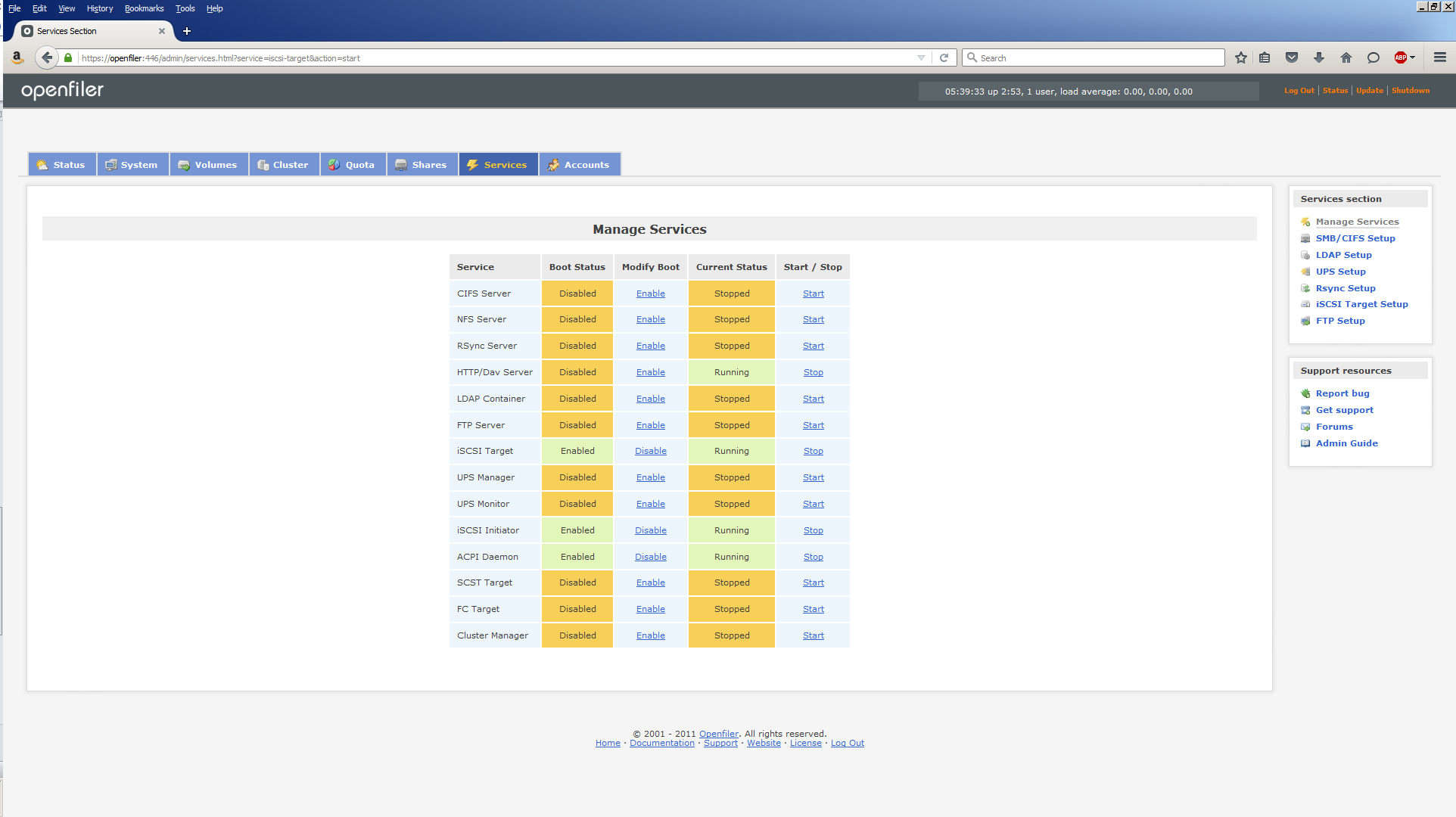

On the status screen, click Services to go to the Manage Services page:

For iSCSI Target, click Enable and Start. For iSCSI Initiator, click Enable and Start. The screen should now look like this:

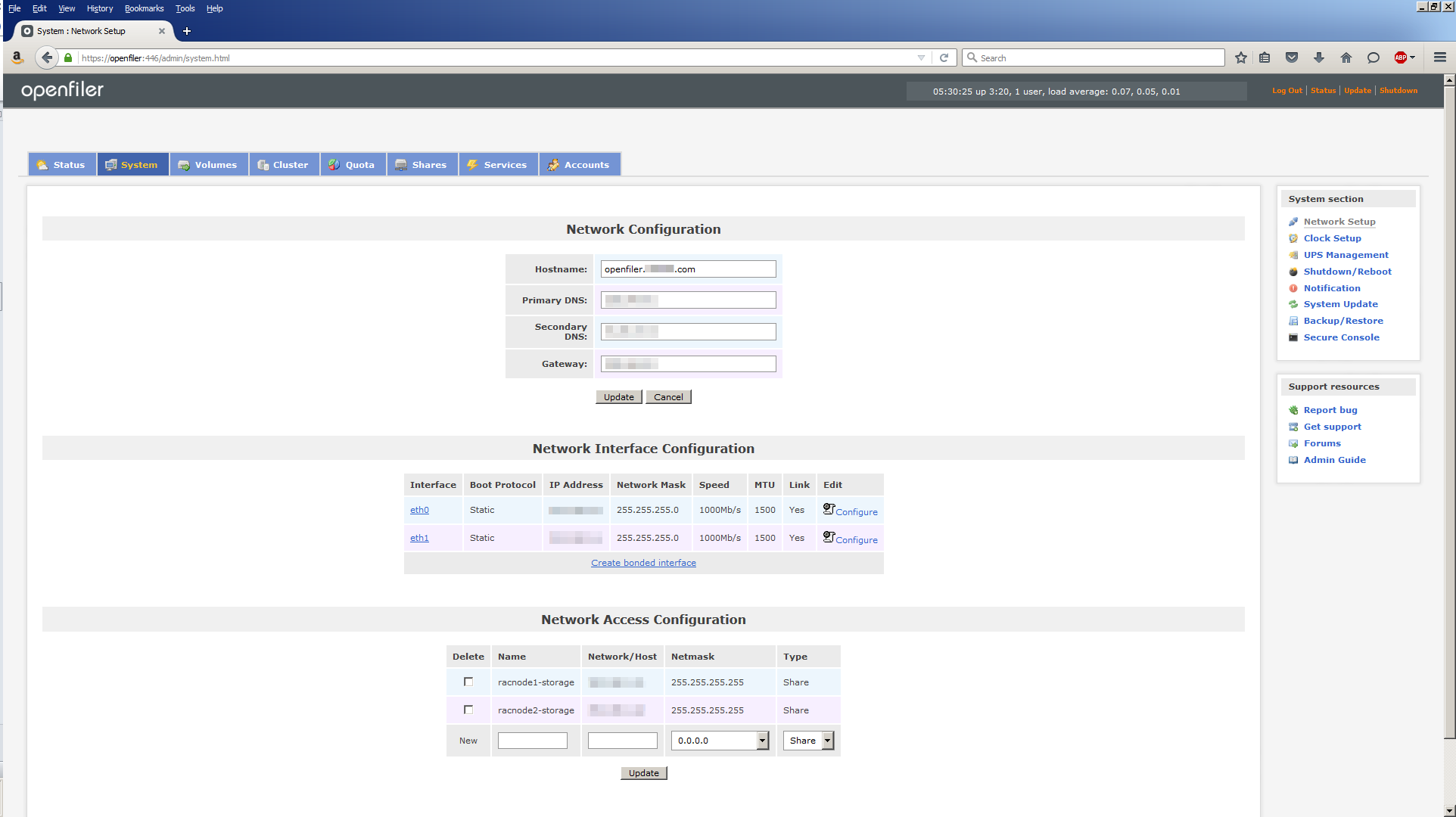

Task #2c: Network Access Configuration.

Click on System to display the networking configuration. Use the following values to populate the Network Access Configuration section:

| Name | Network/Host | Netmask | Type |
|---|---|---|---|
| racnode1-storage | 200.200.20.11 | 255.255.255.255 | Share |
| racnode2-storage | 200.200.20.12 | 255.255.255.255 | Share |

The screen should now look similar to this:

Note, it’s tempting to put the hostname in the Network/Host column especially if you’ve added the correct entries in the Openfiler server’s /etc/hosts file. However, don’t! It doesn’t work when you come to discover the storage from the Oracle RAC nodes. You MUST use the IP address!

Task #2d: Partition the Disk.

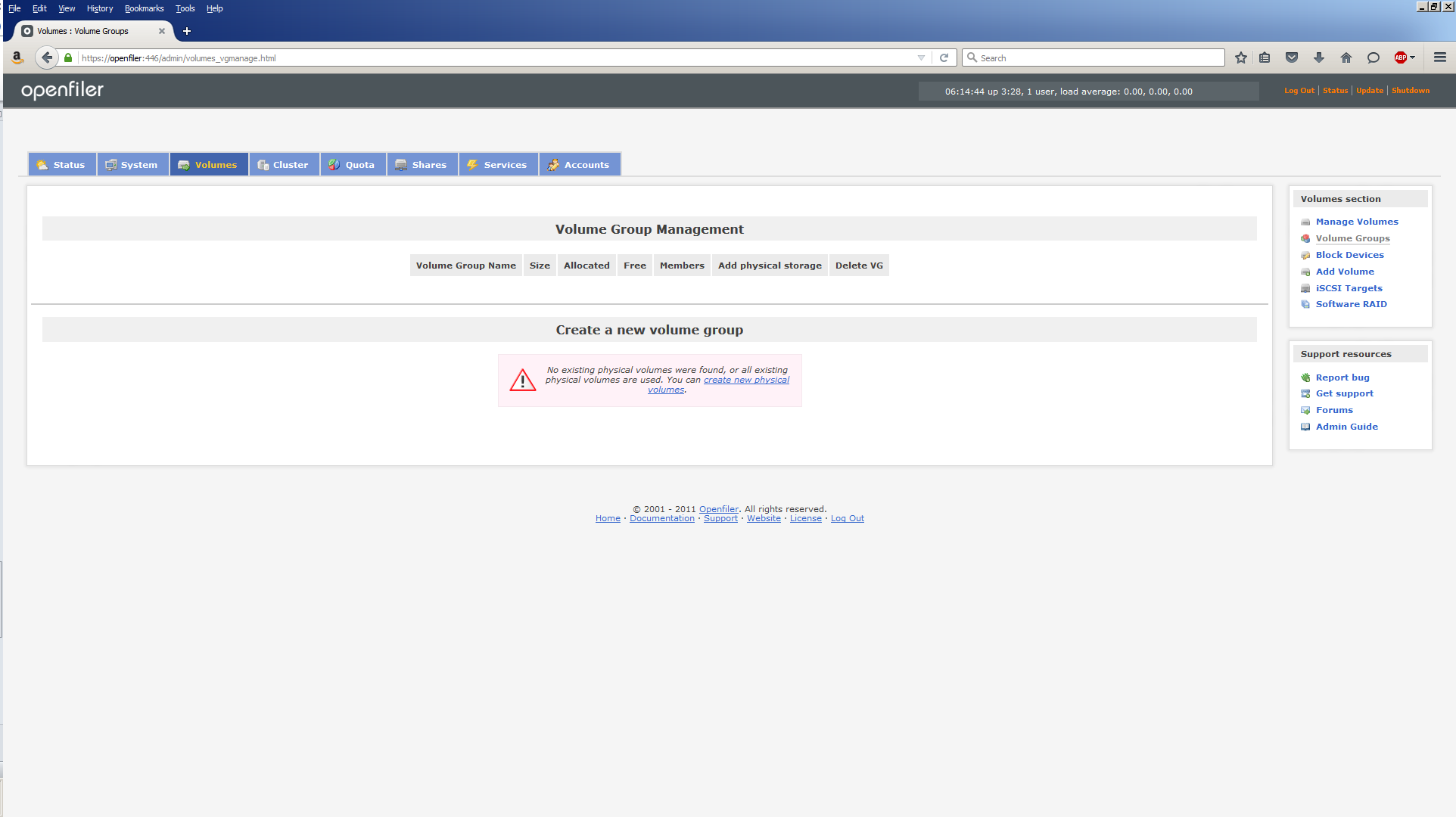

Click Volumes to display the Volume Group Management screen which currently shows no volume groups having been created:

Click Block Devices which shows the physical disks attached to the server:

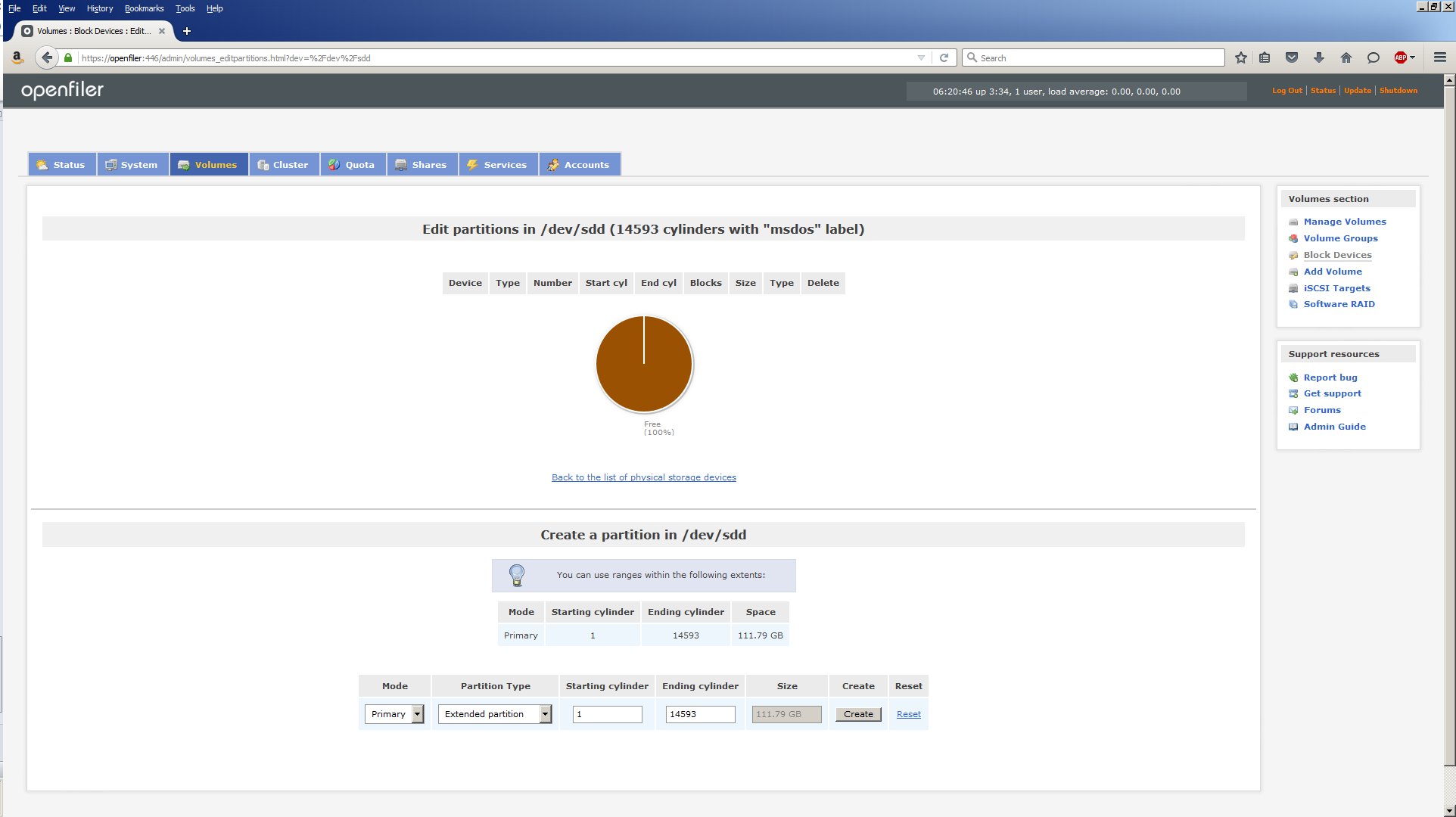

The /dev/sda device is where Openfiler is installed. For the first Oracle RAC cluster, we’ll be using the other Samsung SSD, /dev/sdd. Click on /dev/sdd:

In the Create a partition in /dev/sdd section, use these values then click Create:

| Field | Value |
|---|---|
| Mode | Primary |
| Partition Type | Physical Volume |
| Starting Cylinder | 1 |
| Ending Cylinder | 14593 |

The screen should now look like this:

Note, despite the values you used, Openfiler insists on keeping 5% of the disk free.

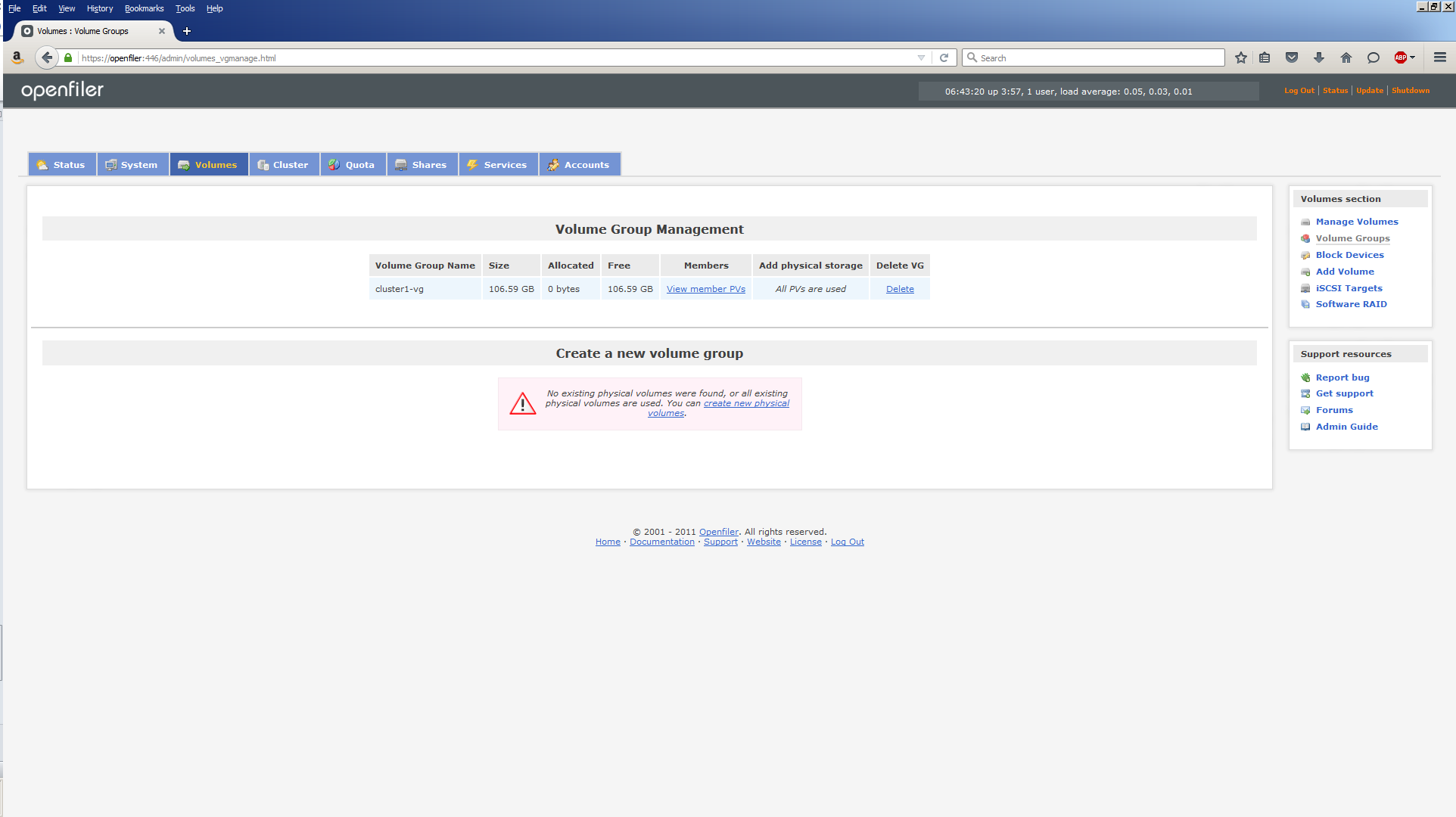

Task #2e: Create a Volume Group.

Click Volume Groups. Enter a new volume group name, cluster1-vg and check the box to select the /dev/sdd1 partition:

Then click Add volume group and the screen display changes to this:

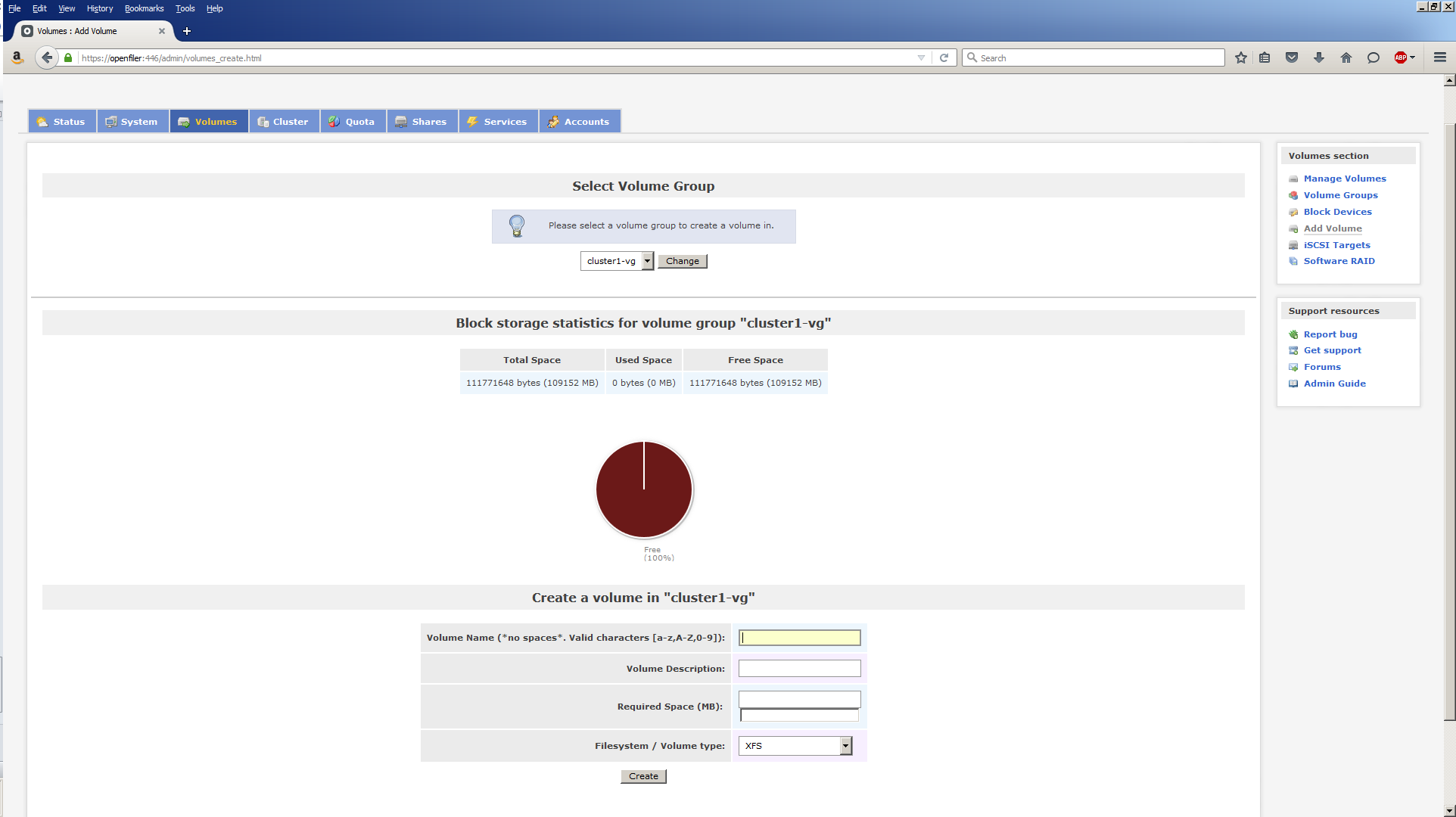

Task #2f: Create Logical Volumes.

The logical volumes we create will eventually end up as ASM disks visible to the Oracle RAC node servers. Click Add Volume.

We can now use this screen to create volumes within the cluster1-vg volume group. Use the following values to create 10 x 10 GB volumes:

| Volume Name | Volume Description | Required Space (MB) | Filesystem / Volume type |
|---|---|---|---|
| c1vg-vol01 | Cluster1 VG Volume #1 | 10240 | block (iSCSI,FC,etc) |
| c1vg-vol02 | Cluster1 VG Volume #2 | 10240 | block (iSCSI,FC,etc) |
| c1vg-vol03 | Cluster1 VG Volume #3 | 10240 | block (iSCSI,FC,etc) |
| c1vg-vol04 | Cluster1 VG Volume #4 | 10240 | block (iSCSI,FC,etc) |
| c1vg-vol05 | Cluster1 VG Volume #5 | 10240 | block (iSCSI,FC,etc) |
| c1vg-vol06 | Cluster1 VG Volume #6 | 10240 | block (iSCSI,FC,etc) |
| c1vg-vol07 | Cluster1 VG Volume #7 | 10240 | block (iSCSI,FC,etc) |
| c1vg-vol08 | Cluster1 VG Volume #8 | 10240 | block (iSCSI,FC,etc) |
| c1vg-vol09 | Cluster1 VG Volume #9 | 10240 | block (iSCSI,FC,etc) |
| c1vg-vol10 | Cluster1 VG Volume #10 | 10240 | block (iSCSI,FC,etc) |

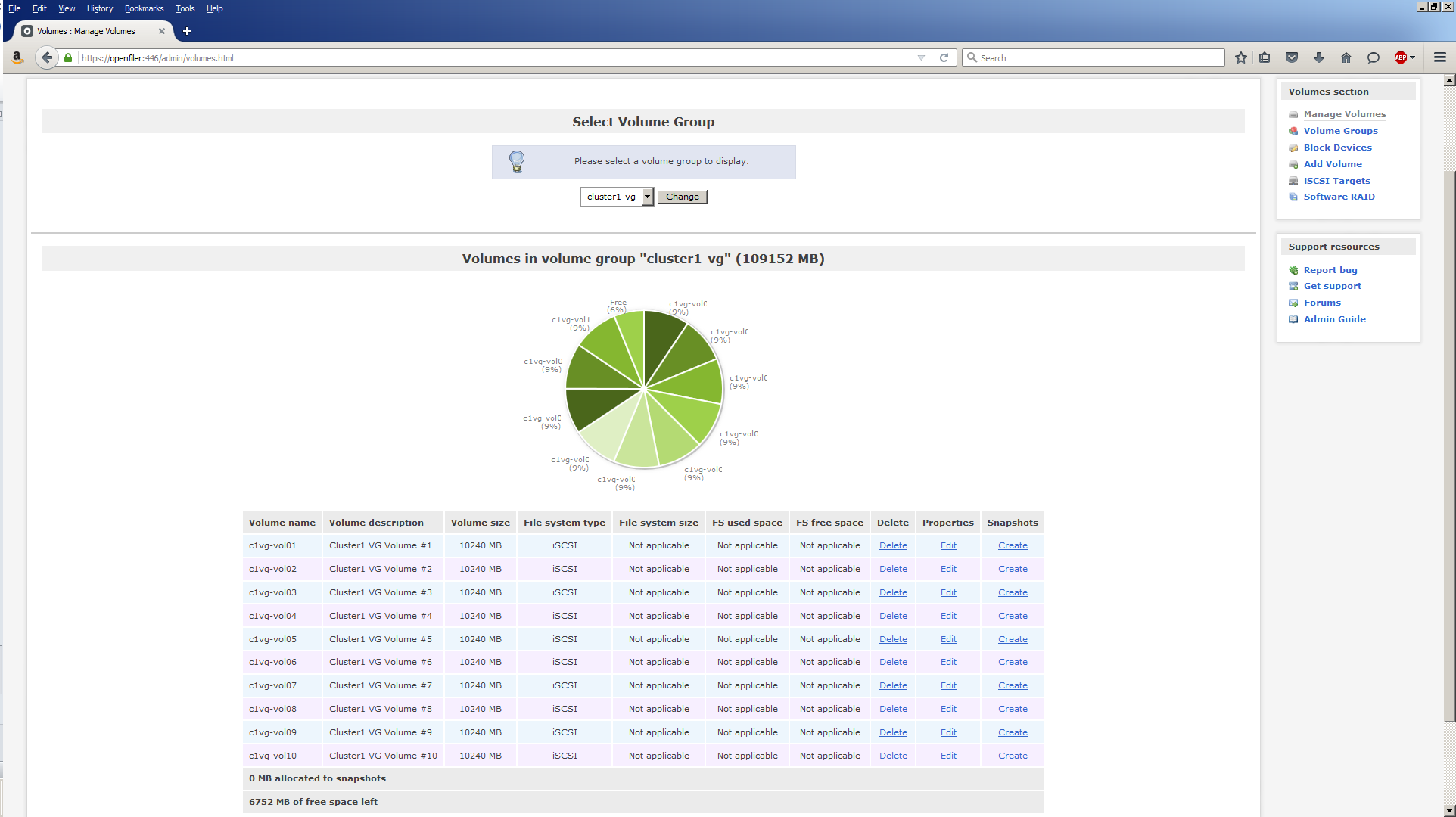

When you’re done, your screen should look similar to this:

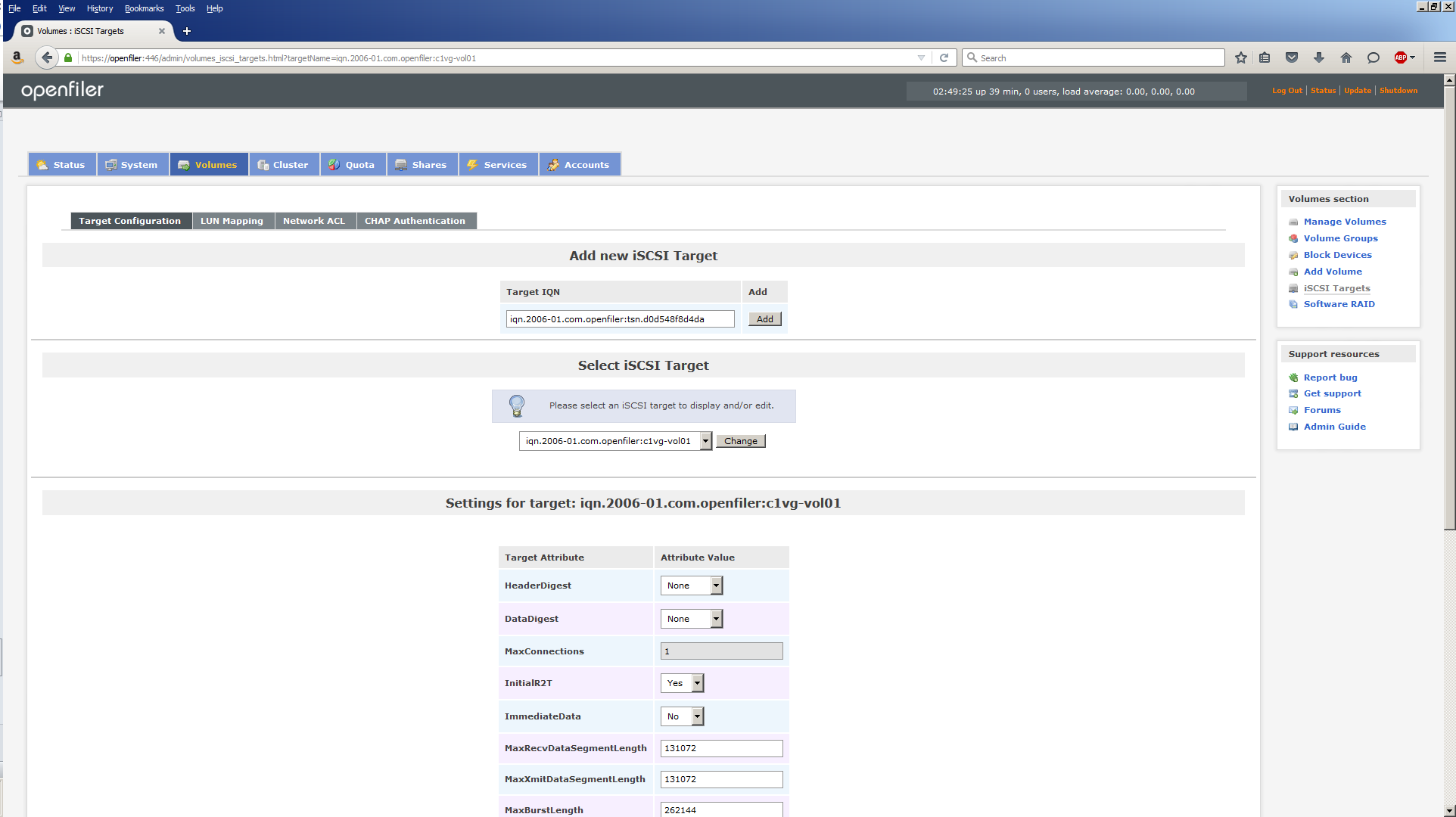

Task #2g: Create iSCSI Targets for Logical Volumes.

An iSCSI target is a way of identifying the logical volumes. Since we have 10 logical volumes, we’ll have 10 iSCSI targets. An iSCSI target is actually identified by a Target IQN or iSCSI Qualified Name. The following procedure will need to be repeated for each of the 10 logical volumes.

Click Volumes, then iSCSI Targets.

The default Target IQNs are not very meaningful, so we’ll add some meaning by changing their names according to the values below:

| Logical Volume Name | Target IQN |
|---|---|
| c1vg-vol01 | iqn.2006-01.com.openfiler:c1vg-vol01 |
| c1vg-vol02 | iqn.2006-01.com.openfiler:c1vg-vol02 |
| c1vg-vol03 | iqn.2006-01.com.openfiler:c1vg-vol03 |
| c1vg-vol04 | iqn.2006-01.com.openfiler:c1vg-vol04 |
| c1vg-vol05 | iqn.2006-01.com.openfiler:c1vg-vol05 |
| c1vg-vol06 | iqn.2006-01.com.openfiler:c1vg-vol06 |
| c1vg-vol07 | iqn.2006-01.com.openfiler:c1vg-vol07 |
| c1vg-vol08 | iqn.2006-01.com.openfiler:c1vg-vol08 |
| c1vg-vol09 | iqn.2006-01.com.openfiler:c1vg-vol09 |
| c1vg-vol10 | iqn.2006-01.com.openfiler:c1vg-vol10 |

After changing the Target IQN, click the Add button. The screen display changes to this:

Ignore the Settings section as we won’t be changing any of those values.

Context is extremely important at this stage and you need to make sure your focus is on the correct object. Therefore, make sure you select the Target IQN you just created from the Select iSCSI Target menu. If it isn’t, as will be the case after you create the first one, select the Target IQN you need, then click the Change button. Selecting the Target IQN from the menu isn’t sufficient. You MUST click the Change button to make it the ‘active’ Target IQN.

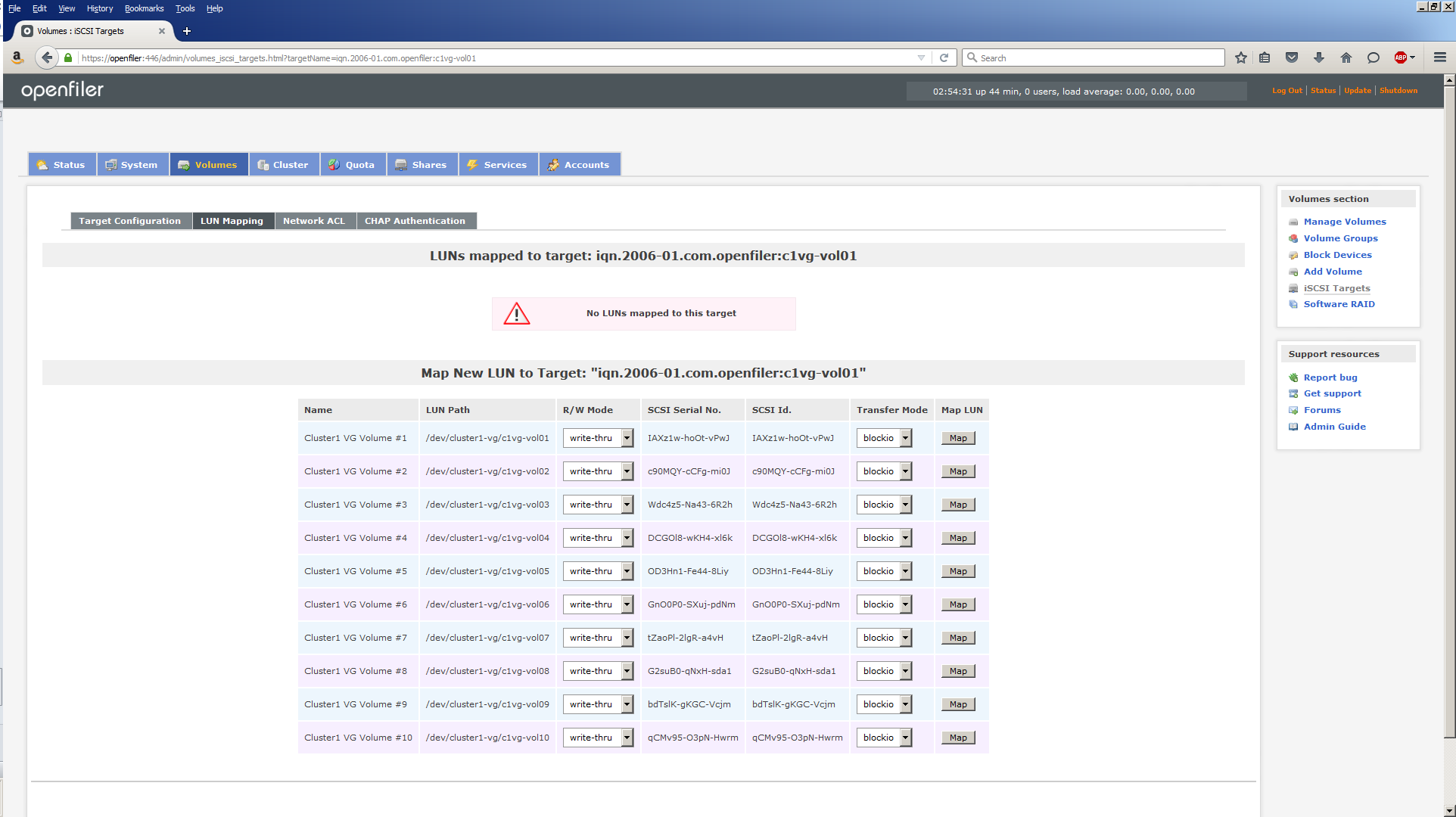

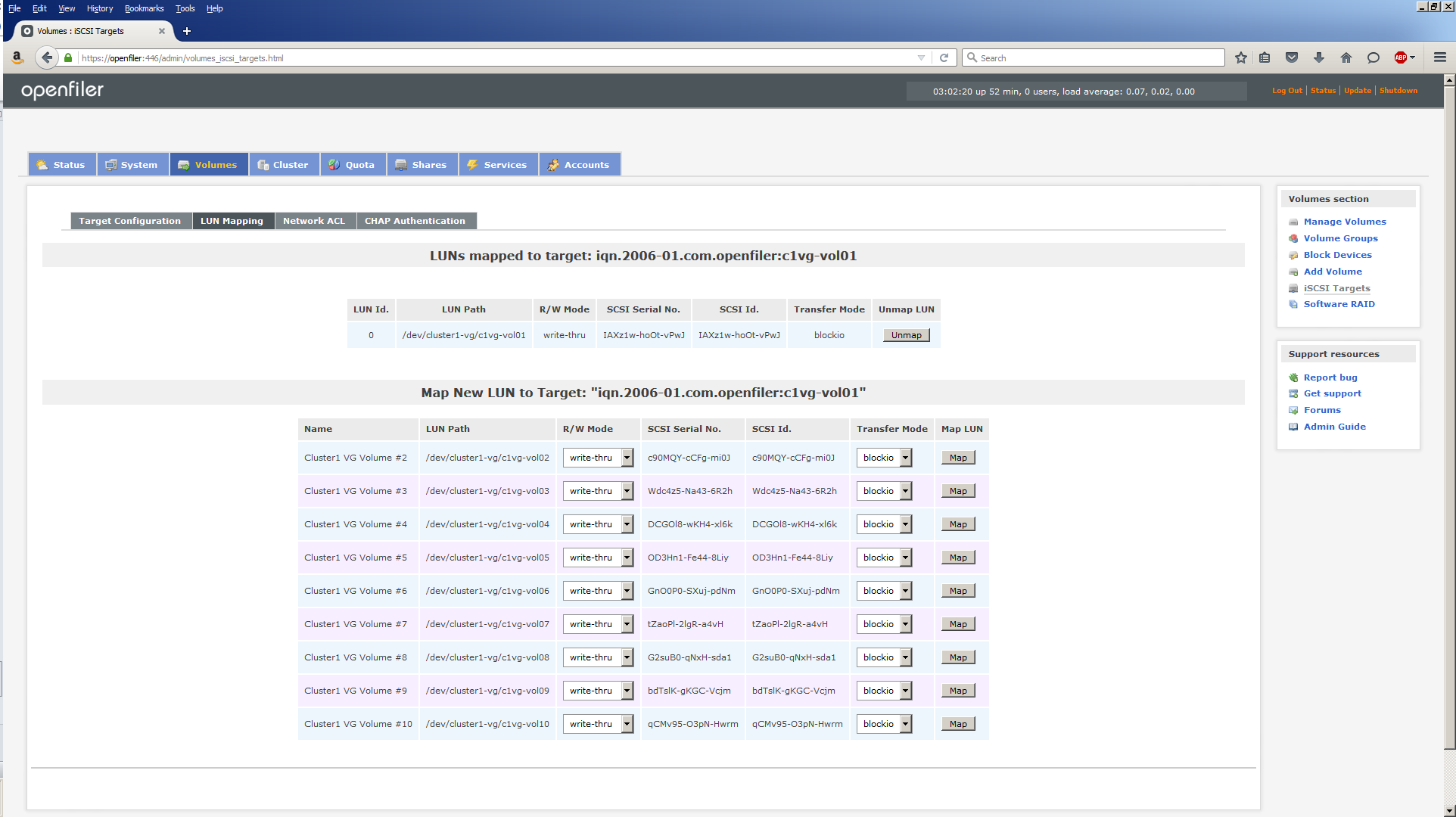

Click LUN Mapping. The screen changes to this:

Click the Map button next to the Logical Volume you wish to map to the currently active Target IQN. First time through, the Map New LUN to Target section will show “iqn.2006-01.com.openfiler:c1vg-vol01”, so click the Map button on the line beginning Cluster1 VG Volume #1. The screen changes to this:

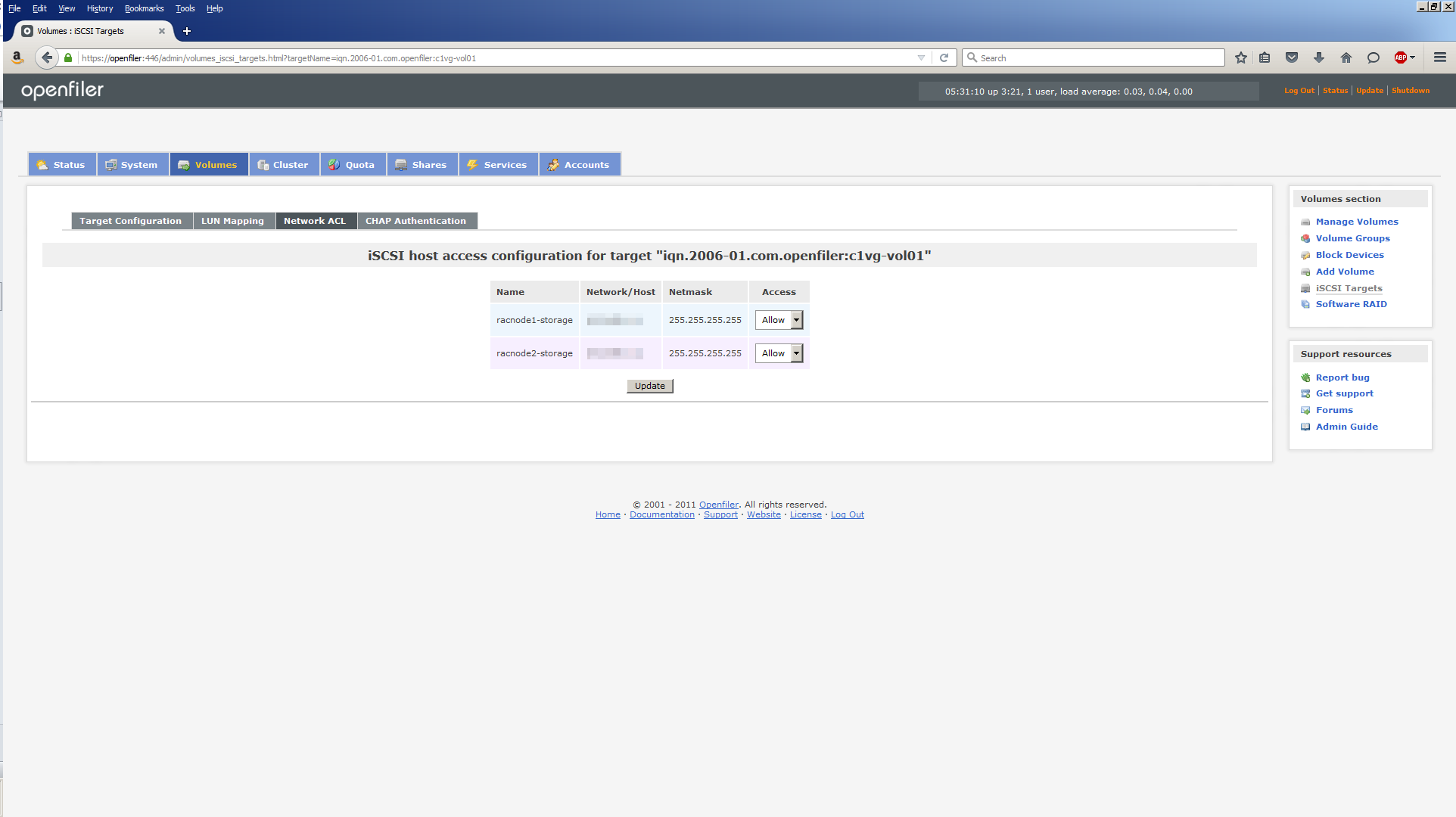

Next, click Network ACL (Access Control List). Here you define which hosts (Oracle RAC nodes) can have access to this Target IQN. Since Openfiler only knows about two nodes on the storage network (200.200.20.x), racnode1-storage and racnode2-storage are listed.

Use the Access menu to change Deny to Allow. Click Update. The screen will refresh but nothing else happens which can be a little deceiving. As long as you hit Update and the screen refreshes (don’t blink or you’ll miss it), you’re fine. Click Target Configuration to go back to this screen and repeat the procedure for c1vg-vol02 through c1vg-vol10.

Task #2h: Review iSCSI targets.

Once you’re done creating a Target IQN, LUN Mapping and Network ACL for all 10 logical volumes, you can check your handiwork. Return to the home page by clicking Status, then click iSCSI Targets:

Task #3: Configure iSCSI Targets in Oracle Linux.

Our attention now turns to making the iSCSI targets visible and usable on the Oracle RAC node servers. To do that, we use the iSCSI initiator client which we installed back in Part 7. There are 6 steps in all, so here we go.

Task #3a: Verify iSCSI client (ALL nodes).

Verify the iSCSI client is installed on both Oracle RAC nodes:

    [root@racnode1 ~]# rpm -qa | grep iscsi
    iscsi-initiator-utils-6.2.0.873-14.0.1.el6.x86_64

    [root@racnode2 ~]# rpm -qa | grep iscsi
    iscsi-initiator-utils-6.2.0.873-14.0.1.el6.x86_64

Then configure the iSCSI client to start on boot up on both Oracle RAC nodes:

    [root@racnode1 ~]# chkconfig iscsid on
    [root@racnode1 ~]# chkconfig iscsi on

    [root@racnode2 ~]# chkconfig iscsid on
    [root@racnode2 ~]# chkconfig iscsi on

Trying to start the services has little effect at this stage, but running the iscsiadm discovery command makes everything spring into life. You’ll see.

Perform this step on all nodes in the cluster.

Task #3b: Discover iSCSI targets (ALL nodes).

The iscsiadm discovery command starts the iscsid service and then discovers the iSCSI targets coming from Openfiler.

    [root@racnode1 ~]# iscsiadm -m discovery -t sendtargets -p openfiler-storage
    Starting iscsid: [ OK ]
    200.200.20.6:3260,1 iqn.2006-01.com.openfiler:c1vg-vol10
    200.200.10.6:3260,1 iqn.2006-01.com.openfiler:c1vg-vol10
    200.200.20.6:3260,1 iqn.2006-01.com.openfiler:c1vg-vol09
    200.200.10.6:3260,1 iqn.2006-01.com.openfiler:c1vg-vol09
    200.200.20.6:3260,1 iqn.2006-01.com.openfiler:c1vg-vol08
    200.200.10.6:3260,1 iqn.2006-01.com.openfiler:c1vg-vol08
    200.200.20.6:3260,1 iqn.2006-01.com.openfiler:c1vg-vol07
    200.200.10.6:3260,1 iqn.2006-01.com.openfiler:c1vg-vol07
    200.200.20.6:3260,1 iqn.2006-01.com.openfiler:c1vg-vol06
    200.200.10.6:3260,1 iqn.2006-01.com.openfiler:c1vg-vol06
    200.200.20.6:3260,1 iqn.2006-01.com.openfiler:c1vg-vol05
    200.200.10.6:3260,1 iqn.2006-01.com.openfiler:c1vg-vol05
    200.200.20.6:3260,1 iqn.2006-01.com.openfiler:c1vg-vol04
    200.200.10.6:3260,1 iqn.2006-01.com.openfiler:c1vg-vol04
    200.200.20.6:3260,1 iqn.2006-01.com.openfiler:c1vg-vol03
    200.200.10.6:3260,1 iqn.2006-01.com.openfiler:c1vg-vol03
    200.200.20.6:3260,1 iqn.2006-01.com.openfiler:c1vg-vol02
    200.200.10.6:3260,1 iqn.2006-01.com.openfiler:c1vg-vol02
    200.200.20.6:3260,1 iqn.2006-01.com.openfiler:c1vg-vol01
    200.200.10.6:3260,1 iqn.2006-01.com.openfiler:c1vg-vol01

Note, we’re using the portal openfiler-storage (the -p option) which is on the storage network (200.200.20.x). However, the discovery output lists targets on the storage network AND the public network (200.200.10.x). I think this is an Openfiler quirk/bug. Needless to say, we’re only interested in the targets on the storage network.
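Since only the storage network entries matter, a small filter can cut the discovery output down to those. This is a sketch rather than anything iscsiadm provides: storage_targets is a made-up helper name, and it assumes the discovery output keeps the "IP:port,tag IQN" layout shown above.

```shell
# Hypothetical filter: read iscsiadm discovery output on stdin and print
# only the IQNs advertised on the given portal IP (first argument).
storage_targets() {
  # Keep lines whose first field starts with "<ip>:"; print the IQN (field 2)
  awk -v ip="$1" 'index($1, ip ":") == 1 { print $2 }' | sort
}
```

Typical use would be `iscsiadm -m discovery -t sendtargets -p openfiler-storage | storage_targets 200.200.20.6`, which should leave one line per target, vol01 through vol10.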

Repeat this step on ALL nodes in the cluster.

Task #3c: Login to iSCSI targets (ALL nodes).

Using the iSCSI target name returned by the discovery command, use the iscsiadm command to log into each iSCSI target, making sure you reference the storage network IP addresses (200.200.20.x):

    [root@racnode1 scripts]# iscsiadm -m node -T iqn.2006-01.com.openfiler:c1vg-vol01 -p 200.200.20.6 -l
    Logging in to [iface: default, target: iqn.2006-01.com.openfiler:c1vg-vol01, portal: 200.200.20.6,3260] (multiple)
    Login to [iface: default, target: iqn.2006-01.com.openfiler:c1vg-vol01, portal: 200.200.20.6,3260] successful.
    [root@racnode1 scripts]# iscsiadm -m node -T iqn.2006-01.com.openfiler:c1vg-vol02 -p 200.200.20.6 -l
    Logging in to [iface: default, target: iqn.2006-01.com.openfiler:c1vg-vol02, portal: 200.200.20.6,3260] (multiple)
    Login to [iface: default, target: iqn.2006-01.com.openfiler:c1vg-vol02, portal: 200.200.20.6,3260] successful.
    [root@racnode1 scripts]# iscsiadm -m node -T iqn.2006-01.com.openfiler:c1vg-vol03 -p 200.200.20.6 -l
    Logging in to [iface: default, target: iqn.2006-01.com.openfiler:c1vg-vol03, portal: 200.200.20.6,3260] (multiple)
    Login to [iface: default, target: iqn.2006-01.com.openfiler:c1vg-vol03, portal: 200.200.20.6,3260] successful.
    [root@racnode1 scripts]# iscsiadm -m node -T iqn.2006-01.com.openfiler:c1vg-vol04 -p 200.200.20.6 -l
    Logging in to [iface: default, target: iqn.2006-01.com.openfiler:c1vg-vol04, portal: 200.200.20.6,3260] (multiple)
    Login to [iface: default, target: iqn.2006-01.com.openfiler:c1vg-vol04, portal: 200.200.20.6,3260] successful.
    [root@racnode1 scripts]# iscsiadm -m node -T iqn.2006-01.com.openfiler:c1vg-vol05 -p 200.200.20.6 -l
    Logging in to [iface: default, target: iqn.2006-01.com.openfiler:c1vg-vol05, portal: 200.200.20.6,3260] (multiple)
    Login to [iface: default, target: iqn.2006-01.com.openfiler:c1vg-vol05, portal: 200.200.20.6,3260] successful.
    [root@racnode1 scripts]# iscsiadm -m node -T iqn.2006-01.com.openfiler:c1vg-vol06 -p 200.200.20.6 -l
    Logging in to [iface: default, target: iqn.2006-01.com.openfiler:c1vg-vol06, portal: 200.200.20.6,3260] (multiple)
    Login to [iface: default, target: iqn.2006-01.com.openfiler:c1vg-vol06, portal: 200.200.20.6,3260] successful.
    [root@racnode1 scripts]# iscsiadm -m node -T iqn.2006-01.com.openfiler:c1vg-vol07 -p 200.200.20.6 -l
    Logging in to [iface: default, target: iqn.2006-01.com.openfiler:c1vg-vol07, portal: 200.200.20.6,3260] (multiple)
    Login to [iface: default, target: iqn.2006-01.com.openfiler:c1vg-vol07, portal: 200.200.20.6,3260] successful.
    [root@racnode1 scripts]# iscsiadm -m node -T iqn.2006-01.com.openfiler:c1vg-vol08 -p 200.200.20.6 -l
    Logging in to [iface: default, target: iqn.2006-01.com.openfiler:c1vg-vol08, portal: 200.200.20.6,3260] (multiple)
    Login to [iface: default, target: iqn.2006-01.com.openfiler:c1vg-vol08, portal: 200.200.20.6,3260] successful.
    [root@racnode1 scripts]# iscsiadm -m node -T iqn.2006-01.com.openfiler:c1vg-vol09 -p 200.200.20.6 -l
    Logging in to [iface: default, target: iqn.2006-01.com.openfiler:c1vg-vol09, portal: 200.200.20.6,3260] (multiple)
    Login to [iface: default, target: iqn.2006-01.com.openfiler:c1vg-vol09, portal: 200.200.20.6,3260] successful.
    [root@racnode1 scripts]# iscsiadm -m node -T iqn.2006-01.com.openfiler:c1vg-vol10 -p 200.200.20.6 -l
    Logging in to [iface: default, target: iqn.2006-01.com.openfiler:c1vg-vol10, portal: 200.200.20.6,3260] (multiple)
    Login to [iface: default, target: iqn.2006-01.com.openfiler:c1vg-vol10, portal: 200.200.20.6,3260] successful.
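Typing ten nearly identical commands is error prone, so here is a sketch of a loop that prints them instead. The function name login_cmds is hypothetical, and it assumes the ten target names and the storage portal address used in this post. It deliberately only prints the commands so you can review them before running anything.

```shell
# Hypothetical helper: print the login command for each of the ten targets.
# Optional first argument overrides the portal IP (defaults to the storage IP).
login_cmds() {
  portal=${1:-200.200.20.6}
  i=1
  while [ "$i" -le 10 ]; do
    printf 'iscsiadm -m node -T iqn.2006-01.com.openfiler:c1vg-vol%02d -p %s -l\n' "$i" "$portal"
    i=$((i + 1))
  done
}
```

Once the printed commands look right, `login_cmds | sh` (as root) would run them one after another.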

As a result, Oracle Linux recognizes the iSCSI targets as disk devices and assigns a device name to each one. A partial fdisk -l listing confirms what has happened:

    Disk /dev/sda: 10.7 GB, 10737418240 bytes
    64 heads, 32 sectors/track, 10240 cylinders
    Units = cylinders of 2048 * 512 = 1048576 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0x00000000

    Disk /dev/sdb: 10.7 GB, 10737418240 bytes
    64 heads, 32 sectors/track, 10240 cylinders
    Units = cylinders of 2048 * 512 = 1048576 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0x00000000

    Disk /dev/sdc: 10.7 GB, 10737418240 bytes
    64 heads, 32 sectors/track, 10240 cylinders
    Units = cylinders of 2048 * 512 = 1048576 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0x00000000

    Disk /dev/sdd: 10.7 GB, 10737418240 bytes
    64 heads, 32 sectors/track, 10240 cylinders
    Units = cylinders of 2048 * 512 = 1048576 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0x00000000

    Disk /dev/sde: 10.7 GB, 10737418240 bytes
    64 heads, 32 sectors/track, 10240 cylinders
    Units = cylinders of 2048 * 512 = 1048576 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0x00000000

    Disk /dev/sdf: 10.7 GB, 10737418240 bytes
    64 heads, 32 sectors/track, 10240 cylinders
    Units = cylinders of 2048 * 512 = 1048576 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0x00000000

    Disk /dev/sdg: 10.7 GB, 10737418240 bytes
    64 heads, 32 sectors/track, 10240 cylinders
    Units = cylinders of 2048 * 512 = 1048576 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0x00000000

    Disk /dev/sdh: 10.7 GB, 10737418240 bytes
    64 heads, 32 sectors/track, 10240 cylinders
    Units = cylinders of 2048 * 512 = 1048576 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0x00000000

    Disk /dev/sdi: 10.7 GB, 10737418240 bytes
    64 heads, 32 sectors/track, 10240 cylinders
    Units = cylinders of 2048 * 512 = 1048576 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0x00000000

    Disk /dev/sdj: 10.7 GB, 10737418240 bytes
    64 heads, 32 sectors/track, 10240 cylinders
    Units = cylinders of 2048 * 512 = 1048576 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0x00000000

By checking the entries in /dev/disk/by-path, we can determine the mapping between the iSCSI target and the local disk device:

    [root@racnode1 by-path]# pwd
    /dev/disk/by-path
    [root@racnode1 by-path]# ls -l | grep iscsi
    lrwxrwxrwx 1 root root 9 Dec 16 15:33 ip-200.200.20.6:3260-iscsi-iqn.2006-01.com.openfiler:c1vg-vol01-lun-0 -> ../../sda
    lrwxrwxrwx 1 root root 9 Dec 16 15:33 ip-200.200.20.6:3260-iscsi-iqn.2006-01.com.openfiler:c1vg-vol02-lun-0 -> ../../sdb
    lrwxrwxrwx 1 root root 9 Dec 16 15:33 ip-200.200.20.6:3260-iscsi-iqn.2006-01.com.openfiler:c1vg-vol03-lun-0 -> ../../sdc
    lrwxrwxrwx 1 root root 9 Dec 16 15:33 ip-200.200.20.6:3260-iscsi-iqn.2006-01.com.openfiler:c1vg-vol04-lun-0 -> ../../sdd
    lrwxrwxrwx 1 root root 9 Dec 16 15:34 ip-200.200.20.6:3260-iscsi-iqn.2006-01.com.openfiler:c1vg-vol05-lun-0 -> ../../sde
    lrwxrwxrwx 1 root root 9 Dec 16 15:34 ip-200.200.20.6:3260-iscsi-iqn.2006-01.com.openfiler:c1vg-vol06-lun-0 -> ../../sdf
    lrwxrwxrwx 1 root root 9 Dec 16 15:34 ip-200.200.20.6:3260-iscsi-iqn.2006-01.com.openfiler:c1vg-vol07-lun-0 -> ../../sdg
    lrwxrwxrwx 1 root root 9 Dec 16 15:35 ip-200.200.20.6:3260-iscsi-iqn.2006-01.com.openfiler:c1vg-vol08-lun-0 -> ../../sdh
    lrwxrwxrwx 1 root root 9 Dec 16 15:35 ip-200.200.20.6:3260-iscsi-iqn.2006-01.com.openfiler:c1vg-vol09-lun-0 -> ../../sdi
    lrwxrwxrwx 1 root root 9 Dec 16 15:35 ip-200.200.20.6:3260-iscsi-iqn.2006-01.com.openfiler:c1vg-vol10-lun-0 -> ../../sdj
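The same target to device mapping can be pulled out in a more compact form by following the symlinks directly. The sketch below assumes the by-path link names follow the pattern shown in the listing above; iscsi_map is a made-up helper name, not a standard tool.

```shell
# Hypothetical helper: print "IQN -> /dev/sdX" for every iSCSI symlink
# found in the given directory (defaults to /dev/disk/by-path).
iscsi_map() {
  dir=${1:-/dev/disk/by-path}
  for link in "$dir"/ip-*-iscsi-*; do
    [ -L "$link" ] || continue              # skip if the glob matched nothing
    name=${link##*-iscsi-}                  # drop everything up to "-iscsi-"
    dev=$(basename "$(readlink "$link")")   # "../../sda" becomes "sda"
    printf '%s -> /dev/%s\n' "${name%-lun-*}" "$dev"
  done
}
```

Running `iscsi_map` with no arguments on a RAC node should print one line per target, for example the vol01 IQN followed by /dev/sda.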

Repeat this step on ALL nodes in the cluster.

Task #3d: Configure automatic iscsi client login (ALL nodes).

To ensure the iscsi client on each node logs into the iSCSI targets each time the system is booted or the iscsid service is restarted, automatic login must be configured. To do that, run these commands, again making sure you reference the storage network IP addresses (200.200.20.x):

    [root@racnode1 scripts]# iscsiadm -m node -T iqn.2006-01.com.openfiler:c1vg-vol01 -p 200.200.20.6 --op update -n node.startup -v automatic
    [root@racnode1 scripts]# iscsiadm -m node -T iqn.2006-01.com.openfiler:c1vg-vol02 -p 200.200.20.6 --op update -n node.startup -v automatic
    [root@racnode1 scripts]# iscsiadm -m node -T iqn.2006-01.com.openfiler:c1vg-vol03 -p 200.200.20.6 --op update -n node.startup -v automatic
    [root@racnode1 scripts]# iscsiadm -m node -T iqn.2006-01.com.openfiler:c1vg-vol04 -p 200.200.20.6 --op update -n node.startup -v automatic
    [root@racnode1 scripts]# iscsiadm -m node -T iqn.2006-01.com.openfiler:c1vg-vol05 -p 200.200.20.6 --op update -n node.startup -v automatic
    [root@racnode1 scripts]# iscsiadm -m node -T iqn.2006-01.com.openfiler:c1vg-vol06 -p 200.200.20.6 --op update -n node.startup -v automatic
    [root@racnode1 scripts]# iscsiadm -m node -T iqn.2006-01.com.openfiler:c1vg-vol07 -p 200.200.20.6 --op update -n node.startup -v automatic
    [root@racnode1 scripts]# iscsiadm -m node -T iqn.2006-01.com.openfiler:c1vg-vol08 -p 200.200.20.6 --op update -n node.startup -v automatic
    [root@racnode1 scripts]# iscsiadm -m node -T iqn.2006-01.com.openfiler:c1vg-vol09 -p 200.200.20.6 --op update -n node.startup -v automatic
    [root@racnode1 scripts]# iscsiadm -m node -T iqn.2006-01.com.openfiler:c1vg-vol10 -p 200.200.20.6 --op update -n node.startup -v automatic

Note, these commands do not produce any output, which is a little unfriendly, but don’t be scared!
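Because the update is silent, one way to reassure yourself is to read the node record back and look at the node.startup setting. The sketch below assumes the record is printed as "name = value" lines, which is how iscsiadm displays a node record when no operation is given; startup_mode is a hypothetical helper name.

```shell
# Hypothetical helper: read an iscsiadm node record on stdin and print
# the value of its node.startup setting.
startup_mode() {
  awk -F' = ' '$1 == "node.startup" { print $2 }'
}
```

For example, `iscsiadm -m node -T iqn.2006-01.com.openfiler:c1vg-vol01 -p 200.200.20.6 | startup_mode` should print the word automatic once the update has taken effect.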

Repeat this step on ALL nodes in the cluster.

Task #3e: Partition the disks (ONE node only).

Now that the iSCSI targets coming from the Openfiler shared storage server are visible as disk devices, we need to partition them. Each drive will have a single partition. Repeat the following command for /dev/sda through /dev/sdj:

    [root@racnode1 scripts]# fdisk /dev/sda
    Device contains neither a valid DOS partition table, nor Sun, SGI or OSF disklabel
    Building a new DOS disklabel with disk identifier 0xdce55f18.
    Changes will remain in memory only, until you decide to write them.
    After that, of course, the previous content won't be recoverable.

    Warning: invalid flag 0x0000 of partition table 4 will be corrected by w(rite)

    WARNING: DOS-compatible mode is deprecated. It's strongly recommended to
             switch off the mode (command 'c') and change display units to
             sectors (command 'u').

    Command (m for help): n
    Command action
       e   extended
       p   primary partition (1-4)
    p
    Partition number (1-4): 1
    First cylinder (1-10240, default 1):
    Using default value 1
    Last cylinder, +cylinders or +size{K,M,G} (1-10240, default 10240):
    Using default value 10240

    Command (m for help): w
    The partition table has been altered!

    Calling ioctl() to re-read partition table.
    Syncing disks.

Note, partitioning the disk devices is only done from ONE node.
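If stepping through the fdisk prompts ten times sounds tedious, the answers can be fed in on standard input instead. This is a sketch under the assumption that fdisk asks exactly the questions shown in the session above (new, primary, partition 1, two defaults, write); fdisk_answers is a made-up name, and a disk that already has a partition table may prompt differently.

```shell
# Hypothetical helper: emit the keystrokes used in the fdisk session above,
# one per line: n, p, 1, <Enter>, <Enter>, w
fdisk_answers() {
  printf '%s\n' n p 1 '' '' w
}
```

You would then run `fdisk_answers | fdisk /dev/sda` for each device from /dev/sda through /dev/sdj. This rewrites the partition table, so double check the device name before pressing Enter.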

Task #3f: Configure persistent device names for iSCSI targets (ALL nodes).

The discovery of the iSCSI targets by Oracle Linux just happened to map c1vg-vol01-lun-0 to /dev/sda, c1vg-vol02-lun-0 to /dev/sdb and so on. After the next reboot, these mappings may change. We need to avoid that and instead have a consistent iSCSI target to disk device mapping each time the server starts. This is achieved using udev, the Linux device manager.

Note, using Oracle’s ASM library driver (Tasks #4a & #4b) can also achieve the same thing udev does, i.e. a consistent mapping between iSCSI targets and disk devices.

Thus, configuring udev is, strictly speaking, unnecessary.

That said, you can use udev or ASMlib or both if you want to. This procedure uses both, just to demonstrate both ways of doing the same thing, one with Oracle’s help (ASMlib) and one without (udev).

Fortunately, each iSCSI target has a unique ID which remains consistent across reboots. So we need to find out what that is. Repeat the following command for /dev/sda through /dev/sdj, making a note of the output for each disk device:

    [root@racnode1 ~]# scsi_id -g -u -d /dev/sda
    14f504e46494c45524941587a31772d686f4f742d7650774a

Next, create a udev rules script called 99-oracle-iscsi.rules and place it in the /etc/udev/rules.d directory where udev can find it.

Note, the 99 signifies the order in which the script will run. Lower numbered scripts run first. The “oracle-iscsi” part is just our way of identifying the script as being related to Oracle. The “.rules” part makes it a rules script.

The rules script will contain 10 lines, one for each disk device. Each line will look like this:

KERNEL=="sd?1", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -d /dev/$parent", RESULT=="14f504e46494c45524941587a31772d686f4f742d7650774a", NAME="iscsi/asm-disk01", OWNER="root", GROUP="disk", MODE="0660"

Only two entries need to be changed for each disk device. The RESULT parameter needs to be set to the ID output by the scsi_id command for each disk device. The NAME parameter needs to be set to the naming convention you wish to follow. In the above example, I’m using iscsi/asm-disk01 for /dev/sda. The NAME parameter for /dev/sdb will therefore be, iscsi/asm-disk02 and so on.
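Since only RESULT and NAME vary from line to line, the rules can be generated rather than typed. The following is a sketch, not the way this file was originally built: make_rule and print_all_rules are hypothetical names, and print_all_rules assumes the ten iSCSI disks are currently /dev/sda through /dev/sdj in volume order, as they are in this post.

```shell
# Hypothetical helper: print one udev rule line.
# $1 = disk number (1..10), $2 = ID reported by scsi_id for that disk
make_rule() {
  printf 'KERNEL=="sd?1", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -d /dev/$parent", RESULT=="%s", NAME="iscsi/asm-disk%02d", OWNER="root", GROUP="disk", MODE="0660"\n' "$2" "$1"
}

# Hypothetical wrapper: query scsi_id for sda..sdj and print all ten rules.
# Assumes sda maps to volume 1, sdb to volume 2 and so on.
print_all_rules() {
  n=1
  for letter in a b c d e f g h i j; do
    make_rule "$n" "$(/sbin/scsi_id -g -u -d "/dev/sd$letter")"
    n=$((n + 1))
  done
}
```

Review the output of `print_all_rules` first, then redirect it into /etc/udev/rules.d/99-oracle-iscsi.rules if it matches what you expect.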

Once the file is saved, it should have this ownership and permissions:

    [root@racnode1 rules.d]# pwd
    /etc/udev/rules.d
    [root@racnode1 rules.d]# ls -l | grep oracle
    -rw-r--r-- 1 root root 2030 Dec 16 17:06 99-oracle-iscsi.rules

To test the udev rules script, run this command for each disk device (/block/sda/sda1 through /block/sdj/sdj1):

[root@racnode1 ~]# udevadm test /block/sda/sda1

Then check the /dev/iscsi directory and the persistent iscsi disk devices should be listed:

    [root@racnode1 iscsi]# pwd
    /dev/iscsi
    [root@racnode1 iscsi]# ls -l
    brw-rw---- 1 root disk 8, 1 Dec 16 17:12 asm-disk01
    brw-rw---- 1 root disk 8, 17 Dec 16 17:16 asm-disk02
    brw-rw---- 1 root disk 8, 33 Dec 16 17:19 asm-disk03
    brw-rw---- 1 root disk 8, 49 Dec 16 17:20 asm-disk04
    brw-rw---- 1 root disk 8, 65 Dec 16 17:20 asm-disk05
    brw-rw---- 1 root disk 8, 81 Dec 16 17:20 asm-disk06
    brw-rw---- 1 root disk 8, 97 Dec 16 17:20 asm-disk07
    brw-rw---- 1 root disk 8, 113 Dec 16 17:21 asm-disk08
    brw-rw---- 1 root disk 8, 129 Dec 16 17:21 asm-disk09
    brw-rw---- 1 root disk 8, 145 Dec 16 17:21 asm-disk10

Repeat this step for ALL nodes in the cluster.

Now reboot ALL nodes.

Task #4: Configure Oracle ASMlib.

Only a couple of steps to follow here to configure ASMLib.

Task #4a: Configure Oracle ASM library driver (ALL nodes).

Verify the oracleasm packages are installed:

    [root@racnode1 scripts]# rpm -qa | grep oracleasm
    oracleasmlib-2.0.12-1.el6.x86_64
    oracleasm-support-2.1.8-1.el6.x86_64

Then, as the root user, run these commands:

    [root@racnode1 ~]# oracleasm configure -i
    Configuring the Oracle ASM library driver.

    This will configure the on-boot properties of the Oracle ASM library
    driver. The following questions will determine whether the driver is
    loaded on boot and what permissions it will have. The current values
    will be shown in brackets ('[]'). Hitting <ENTER> without typing an
    answer will keep that current value. Ctrl-C will abort.

    Default user to own the driver interface []: grid
    Default group to own the driver interface []: asmadmin
    Start Oracle ASM library driver on boot (y/n) [n]: y
    Scan for Oracle ASM disks on boot (y/n) [y]: y
    Writing Oracle ASM library driver configuration: done

    [root@racnode1 ~]# oracleasm init
    Creating /dev/oracleasm mount point: /dev/oracleasm
    Loading module "oracleasm": oracleasm
    Configuring "oracleasm" to use device physical block size
    Mounting ASMlib driver filesystem: /dev/oracleasm

Repeat this step for ALL nodes in the cluster.

Task #4b: Create ASM disks (ONE node only).

Using the oracleasm createdisk command, create an ASM disk for each iSCSI device created in Task #3f (/dev/iscsi/asm-disk01 through /dev/iscsi/asm-disk10):

    [root@racnode1 disks]# oracleasm createdisk ASMDISK01 /dev/iscsi/asm-disk01
    Writing disk header: done
    Instantiating disk: done
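The other nine disks follow the same naming pattern, so the commands can be printed by a loop. This is a sketch assuming the udev device names from Task #3f and the ASMDISKnn labels used in this post; createdisk_cmds is a made-up helper name and it only prints the commands rather than running them.

```shell
# Hypothetical helper: print the createdisk command for each of the ten disks.
createdisk_cmds() {
  i=1
  while [ "$i" -le 10 ]; do
    printf 'oracleasm createdisk ASMDISK%02d /dev/iscsi/asm-disk%02d\n' "$i" "$i"
    i=$((i + 1))
  done
}
```

After checking the output, `createdisk_cmds | sh` (as root, on ONE node only) would create all ten ASM disks.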

Note, if you chose not to use udev, then the command to create an ASM disk using a block device would be:

[root@racnode1 disks]# oracleasm createdisk ASMDISK01 /dev/sda1

Note, the remnants of ASM disk headers can be left behind even when a drive has been re-partitioned. This can prevent the new ASM disk from being instantiated. You will see this when that happens:

    [root@racnode1 disks]# oracleasm createdisk ASMDISK01 /dev/iscsi/asm-disk01
    Device "/dev/iscsi/asm-disk01" is already labeled for ASM disk "ASMDISK01"

If you see this, you need to destroy the old disk header. A simple way to do that is documented here. Once the header is wiped, the oracleasm createdisk command can create a new one. Once all the ASM disks have been created, they can be listed using this command:

    [root@racnode1 disks]# oracleasm listdisks
    ASMDISK01
    ASMDISK02
    ASMDISK03
    ASMDISK04
    ASMDISK05
    ASMDISK06
    ASMDISK07
    ASMDISK08
    ASMDISK09
    ASMDISK10

They can also be seen at the OS level.

Note, the user and group ownership is grid:asmadmin:

    [root@racnode1 disks]# pwd
    /dev/oracleasm/disks
    [root@racnode1 disks]# ls -l
    brw-rw---- 1 grid asmadmin 8, 1 Dec 16 18:31 ASMDISK01
    brw-rw---- 1 grid asmadmin 8, 17 Dec 16 18:23 ASMDISK02
    brw-rw---- 1 grid asmadmin 8, 33 Dec 16 18:23 ASMDISK03
    brw-rw---- 1 grid asmadmin 8, 49 Dec 16 18:23 ASMDISK04
    brw-rw---- 1 grid asmadmin 8, 65 Dec 16 18:24 ASMDISK05
    brw-rw---- 1 grid asmadmin 8, 81 Dec 16 18:26 ASMDISK06
    brw-rw---- 1 grid asmadmin 8, 97 Dec 16 18:26 ASMDISK07
    brw-rw---- 1 grid asmadmin 8, 113 Dec 16 18:26 ASMDISK08
    brw-rw---- 1 grid asmadmin 8, 129 Dec 16 18:26 ASMDISK09
    brw-rw---- 1 grid asmadmin 8, 145 Dec 16 18:26 ASMDISK10

Note, you can determine the mapping between the ASM disks and the block devices by examining the major and minor device numbers. For example, the major number of ASMDISK01 is 8 and its minor number is 1. Similarly, the major number for ASMDISK02 is also 8 and its minor number is 17. Compare the major and minor numbers with a directory listing of the block devices:

    [root@racnode1 disks]# ls -l /dev/sd*1
    brw-rw---- 1 root disk 8, 1 Jan 26 13:55 /dev/sda1
    brw-rw---- 1 root disk 8, 17 Jan 26 13:55 /dev/sdb1
    brw-rw---- 1 root disk 8, 33 Jan 26 13:55 /dev/sdc1
    brw-rw---- 1 root disk 8, 49 Jan 26 13:55 /dev/sdd1
    brw-rw---- 1 root disk 8, 65 Jan 26 13:55 /dev/sde1
    brw-rw---- 1 root disk 8, 81 Jan 26 13:55 /dev/sdf1
    brw-rw---- 1 root disk 8, 97 Jan 26 13:55 /dev/sdg1
    brw-rw---- 1 root disk 8, 113 Jan 26 13:55 /dev/sdh1
    brw-rw---- 1 root disk 8, 129 Jan 31 14:05 /dev/sdi1
    brw-rw---- 1 root disk 8, 145 Jan 26 13:55 /dev/sdj1

So we can see that ASMDISK01 maps to /dev/sda1, ASMDISK02 maps to /dev/sdb1 and so on.
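The minor numbers in these listings follow a simple pattern: under major number 8, the Linux sd driver reserves 16 minor numbers per disk, so the minor number is 16 times the disk's position (sda is 0, sdb is 1 and so on) plus the partition number. A small sketch of that arithmetic, with sd_minor being a made-up helper name that only covers the first 16 disks (sda through sdp):

```shell
# Hypothetical helper: compute the minor number of /dev/sd<letter><partition>.
# $1 = drive letter (a..p), $2 = partition number
sd_minor() {
  idx=0
  for letter in a b c d e f g h i j k l m n o p; do
    if [ "$letter" = "$1" ]; then
      echo $((idx * 16 + $2))
      return 0
    fi
    idx=$((idx + 1))
  done
  return 1   # letter outside a..p
}
```

So `sd_minor a 1` gives 1 and `sd_minor j 1` gives 145, matching ASMDISK01 and ASMDISK10 in the listings above.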

The other node will not see the ASM disks immediately. Use this command to instantiate the disks on racnode2:

    [root@racnode2 ~]# oracleasm scandisks
    Reloading disk partitions: done
    Cleaning any stale ASM disks...
    Scanning system for ASM disks...
    Instantiating disk "ASMDISK08"
    Instantiating disk "ASMDISK10"
    Instantiating disk "ASMDISK05"
    Instantiating disk "ASMDISK09"
    Instantiating disk "ASMDISK07"
    Instantiating disk "ASMDISK01"
    Instantiating disk "ASMDISK04"
    Instantiating disk "ASMDISK02"
    Instantiating disk "ASMDISK03"
    Instantiating disk "ASMDISK06"

Note, for Oracle Database 12c there is a new mechanism called the ASM Filter Driver. Obviously we haven’t used that here. Maybe when we re-visit this section in the future we can migrate to using that.

So there you have it. Shared storage configured and ready to go. Everything is now in place for a successful install of the Grid Infrastructure and database software. See you in Part 10 for that.
